Summer of Codex

Introducing GPT‑5 Codex: Agentic Coding, Outside ChatGPT

GPT‑5 Codex is a GPT‑5 variant optimized for agentic coding workflows. It is positioned as a code-first assistant and, at least for now, isn't offered as a model in the regular ChatGPT chat interface.

What it is: A GPT‑5 build tuned for autonomous code reading, planning, and multi‑file edits

Why it matters: Faster refactors, end‑to‑end feature scaffolding, and tighter tool‑use loops for repos

What to watch: Access model, repository context limits, and whether it supports local toolchains out of the box

Seedream 4.0: Unified Image Generation + Editing

ByteDance announced Seedream 4.0, a next‑gen model that unifies image generation and in‑context editing in a single architecture. Results look strong, especially for fine‑grained edits.

Highlights

One model for both create and edit

Strong fidelity on local changes (textures, lighting)

Promising for iterative creative workflows

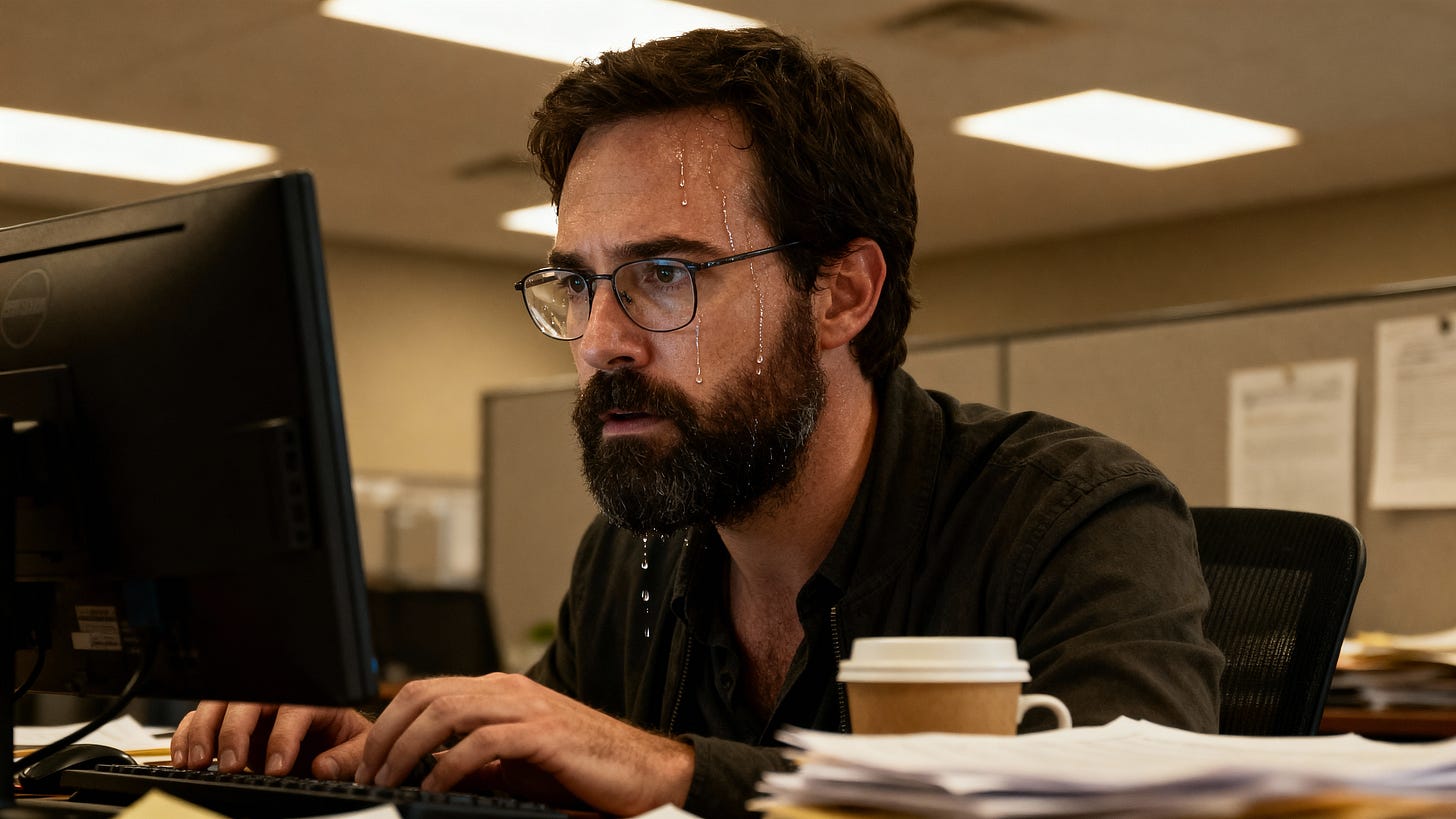

Quick take: Convincing realism, though some artifacts still show up under extreme lighting or moisture.

Claude Training Policy Change

Anthropic will begin training future Claude models on user data (including new or resumed chats and coding sessions) by default unless users opt out by late September.

Norms: This mirrors patterns we’ve already seen from OpenAI and Google

Control: Opt‑out is available in settings

Wishlist: A true “private session” mode like ChatGPT’s temporary chat would reduce friction when you don’t want logs used for training

Practical tip: If you handle sensitive client work, set org‑wide guidance now and verify default settings per workspace.

How People Use ChatGPT: New Paper

OpenAI published an analysis of real‑world usage patterns with several useful insights into how people prompt and iterate.

Link: How People Use ChatGPT (PDF)

Why it’s useful: Grounded patterns you can borrow for onboarding and team training

Why Models Answer the Same Question Differently

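Thinking Machines explores why LLMs can return different answers to identical prompts, even with temperature set to 0. Sampling settings are only part of the story: the post traces much of the drift to inference kernels that aren't batch-invariant, so the same request can produce slightly different numbers depending on how many other requests the server is processing at the time.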
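Part of the variation can originate below the sampling layer. Floating-point addition is not associative, so adding the same numbers in a different grouping can round to a different result, and any reduction whose split depends on an outside factor (such as how many requests share a batch) inherits that sensitivity. The minimal Python sketch below illustrates only the arithmetic; `chunked_sum` and `sequential_sum` are hypothetical toys, not how any real inference kernel is written.

```python
import math

def sequential_sum(values):
    """Add values left to right with plain float addition."""
    total = 0.0
    for v in values:
        total += v
    return total

def chunked_sum(values, chunk_size):
    """Sum fixed-size chunks first, then add up the partial sums.

    Toy stand-in for a parallel reduction whose split depends on an
    outside factor (here just chunk_size).
    """
    partials = [
        sequential_sum(values[i:i + chunk_size])
        for i in range(0, len(values), chunk_size)
    ]
    return sequential_sum(partials)

# Grouping alone changes the rounded result.
print((0.1 + 0.2) + 0.3)  # 0.6000000000000001
print(0.1 + (0.2 + 0.3))  # 0.6

# Same four numbers, different reduction splits, different answers.
values = [1e16, 1.0, -1e16, 1.0]
print(chunked_sum(values, 4))  # 1.0
print(chunked_sum(values, 2))  # 0.0
print(math.fsum(values))       # 2.0 (correctly rounded exact sum)
```

None of these results is "wrong" by floating-point rules; they differ only in the order of rounding, which is why a fixed prompt and fixed settings do not by themselves guarantee bit-identical outputs.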

Read: Post by Thinking Machines

Takeaway: Small differences in temperature or context can compound, because every generated token conditions the next one; standardize prompts, sampling settings, and retrieval for more consistent output
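To make the temperature point concrete, here is a minimal Python sketch of temperature-scaled softmax sampling. It assumes a hypothetical `toy_next_logits` function in place of a real model. The point is that each sampled token feeds the next step, so one different early pick changes everything after it, while greedy decoding (temperature 0) or a pinned seed makes this toy fully repeatable.

```python
import math
import random

def softmax_with_temperature(logits, temperature):
    """Turn logits into probabilities; lower temperature sharpens them."""
    if temperature <= 0:
        raise ValueError("temperature must be > 0 (use greedy decoding for 0)")
    scaled = [x / temperature for x in logits]
    peak = max(scaled)  # subtract the max so exp() cannot overflow
    exps = [math.exp(x - peak) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]

def sample_token(logits, temperature, rng):
    """Greedy argmax at temperature 0, otherwise a weighted random draw."""
    if temperature == 0:
        return max(range(len(logits)), key=lambda i: logits[i])
    probs = softmax_with_temperature(logits, temperature)
    return rng.choices(range(len(logits)), weights=probs, k=1)[0]

def toy_next_logits(prev_token, vocab_size=4):
    """Hypothetical stand-in for a model: favors the token after prev_token."""
    return [2.0 if i == (prev_token + 1) % vocab_size else 0.5
            for i in range(vocab_size)]

def generate(length, temperature, seed):
    """Each sampled token feeds the next step, so early picks shape later ones."""
    rng = random.Random(seed)
    tokens = [0]
    for _ in range(length):
        logits = toy_next_logits(tokens[-1])
        tokens.append(sample_token(logits, temperature, rng))
    return tokens

print(generate(5, 0, seed=1))  # [0, 1, 2, 3, 0, 1] for any seed
print(generate(8, 1.0, seed=42) == generate(8, 1.0, seed=42))  # True
print(generate(8, 1.0, seed=1), generate(8, 1.0, seed=2))  # may differ
```

Pinning temperature and seed removes the sampling-level randomness in this toy. Real serving stacks can still drift at temperature 0 for reasons below the sampling layer, so treat this as a model of the sampling step only.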