GPT-5, Explained

Hey there, Happy Tuesday! 👋 In this snack-sized issue, we are going over the GPT-5 release and some of our early thoughts.

GPT-5 Is Out

The day we've all been waiting for is here. I can't remember the last time I was so excited about a product launch that I added it to my calendar to watch the livestream. The consensus is that GPT-5 operates differently from previous models. Here are the major changes that have arrived. More updates kept coming in even as we put this together.

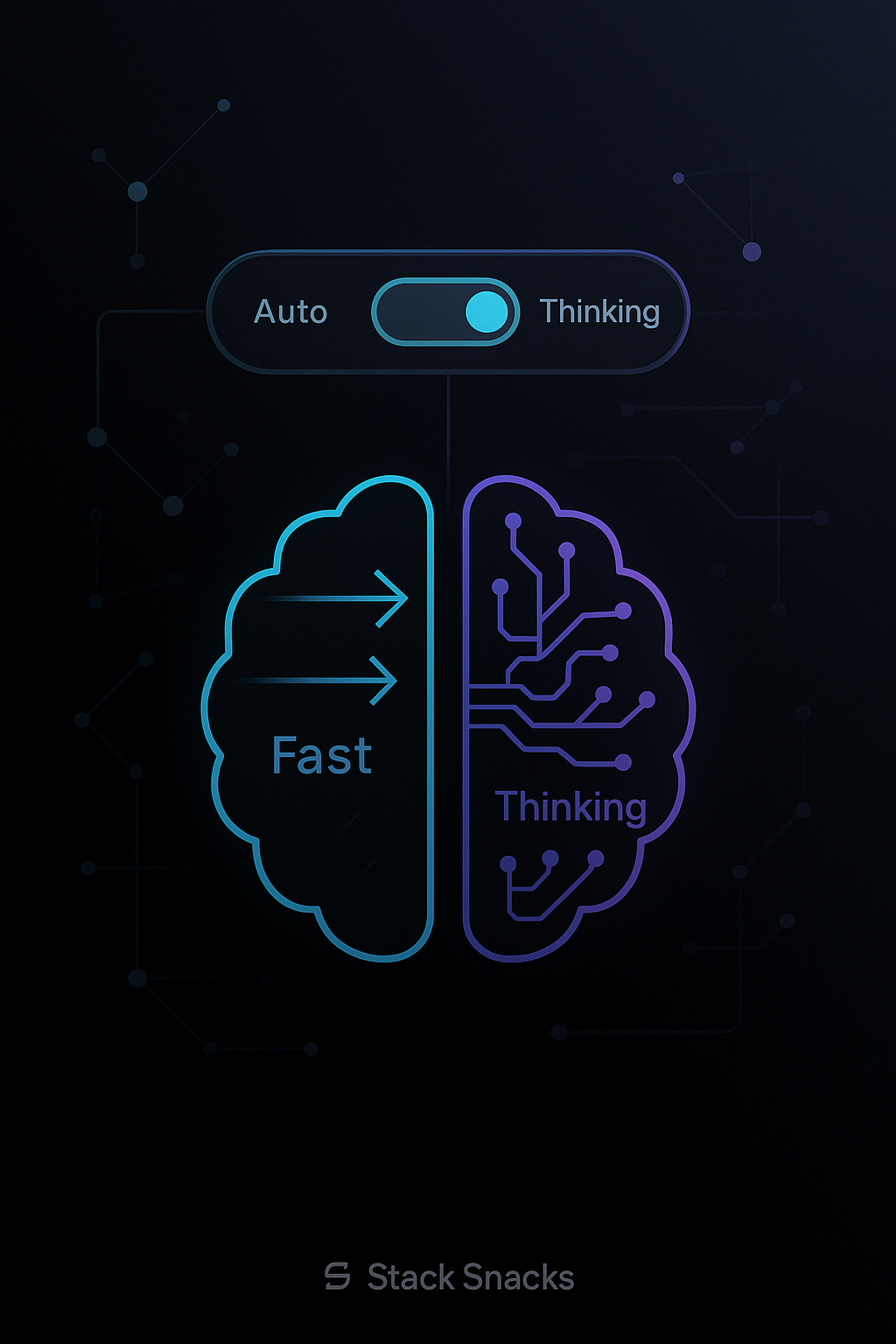

OpenAI's new flagship integrates reasoning directly into the default ChatGPT experience and introduces a "unified system" that determines when to provide quick answers versus deeper thinking.

GPT-5 isn't just one model; it's a system with two core brains: a fast, high-throughput model for most queries and a deeper "thinking" model for harder ones. A real-time router sits in front and decides which path to take based on the conversation type, complexity, tool needs, and even your explicit intent (e.g., if you type "think hard about this," it biases toward the deeper model).

Now, most people have never used a reasoning model, or may not even know what I am talking about. If that's you, the biggest change you can make to get the most out of GPT-5 right now might be to ask it to "think hard" about whatever your prompt is.
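To make the routing idea concrete, here is a toy sketch in Python. It is purely illustrative: OpenAI has not published how the real router works, so the cue phrases, the length threshold, and the `Request` and `route` names are all assumptions made up for this example.

```python
from dataclasses import dataclass

# Hypothetical cue phrases; the real router's signals are not public.
THINK_CUES = ("think hard", "think deeply", "think carefully")


@dataclass
class Request:
    prompt: str
    needs_tools: bool = False  # stand-in for the "tool needs" signal


def route(request: Request, length_threshold: int = 400) -> str:
    """Return "thinking" or "fast" for a request (toy heuristic only)."""
    text = request.prompt.lower()

    # Explicit intent: in this toy version a cue phrase always wins,
    # whereas the real router is only described as being biased by it.
    if any(cue in text for cue in THINK_CUES):
        return "thinking"

    # Tool use or a long prompt is used here as a crude proxy for complexity.
    if request.needs_tools or len(request.prompt) > length_threshold:
        return "thinking"

    return "fast"


if __name__ == "__main__":
    print(route(Request("What is the capital of France?")))
    print(route(Request("Think hard about this: plan my database migration.")))
```

The point of the sketch is only the shape of the decision: a cheap check runs before any model does, and a phrase like "think hard" is one of the inputs it looks at.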

GPT-5 API Details

There are now a lot of models to choose from in the API. Four are available to developers, and the breakdown is below.

gpt-5 — best for complex, multi-step work (coding, analysis, agent tasks).

API context: up to 272K input + 128K output (~400K total).

Pricing (per 1M tokens): $1.25 input • $10.00 output • $0.125 cached input.

Controls: reasoning_effort (incl. minimal) and verbosity (low | medium | high).

gpt-5-mini — faster/cheaper for well-defined tasks.

API context: up to 272K input + 128K output (~400K total).

Pricing (per 1M): $0.25 input • $2.00 output • $0.025 cached input.

gpt-5-nano — high-volume, ultra-low-latency use.

API context: up to 272K input + 128K output (~400K total).

Pricing (per 1M): $0.05 input • $0.40 output • $0.005 cached input.

gpt-5-chat-latest — chat-optimized; prioritizes speed over deep reasoning.

API context & listing: see model page; billed like GPT-5 for input/cached input.

Notes: in ChatGPT, GPT-5 uses a router that can choose a non-reasoning chat path for lighter asks.
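If you want to compare the three priced models for your own workload, the numbers above are easy to turn into a quick estimator. This is a minimal sketch: the prices and context limits are copied from the list above, while the function names and the assumption that cached tokens are a subset of input tokens billed at the cached rate are mine, so check your actual invoice before relying on it.

```python
# USD per 1M tokens, copied from the pricing lines above.
PRICING = {
    "gpt-5": {"input": 1.25, "cached_input": 0.125, "output": 10.00},
    "gpt-5-mini": {"input": 0.25, "cached_input": 0.025, "output": 2.00},
    "gpt-5-nano": {"input": 0.05, "cached_input": 0.005, "output": 0.40},
}

# API context limits listed above (the same for all three models).
MAX_INPUT_TOKENS = 272_000
MAX_OUTPUT_TOKENS = 128_000


def fits_context(input_tokens: int, output_tokens: int) -> bool:
    """Check a request against the listed input and output limits."""
    return input_tokens <= MAX_INPUT_TOKENS and output_tokens <= MAX_OUTPUT_TOKENS


def estimate_cost(model: str, input_tokens: int, output_tokens: int,
                  cached_input_tokens: int = 0) -> float:
    """Estimate the cost in USD of one request.

    Assumption: cached_input_tokens is the part of input_tokens that is
    billed at the cached rate instead of the normal input rate.
    """
    if cached_input_tokens > input_tokens:
        raise ValueError("cached_input_tokens cannot exceed input_tokens")
    prices = PRICING[model]
    uncached = input_tokens - cached_input_tokens
    total = (uncached * prices["input"]
             + cached_input_tokens * prices["cached_input"]
             + output_tokens * prices["output"])
    return total / 1_000_000


if __name__ == "__main__":
    for name in PRICING:
        cost = estimate_cost(name, input_tokens=100_000, output_tokens=20_000)
        print(f"{name}: ${cost:.4f}")
```

For example, 100K input tokens and 20K output tokens on gpt-5 works out to 0.1 × $1.25 + 0.02 × $10.00 = $0.325, with output tokens making up most of the bill.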

Launch & Updates

Launch weeks are always bumpy, and GPT-5 was no exception. That’s one reason we waited before doing a deep dive: there was a lot to absorb, and we wanted to give both ourselves and OpenAI time to settle into the new setup. Early on, some users ran into rate limits and other hiccups, and reactions across the community were mixed.

While I was drafting this, an important update landed from the ChatGPT team:

Updates to ChatGPT: You can now choose between “Auto,” “Fast,” and “Thinking” for GPT-5. Most users will want Auto, but the additional control will be useful for some people. Rate limits are now 3,000 messages/week with GPT-5 Thinking, and then extra capacity on GPT-5 Thinking mini after that limit. Context limit for GPT-5 Thinking is 196k tokens. We may have to update rate limits over time depending on usage. 4o is back in the model picker for all paid users by default. If we ever do deprecate it, we will give plenty of notice. Paid users also now have a “Show additional models” toggle in ChatGPT web settings which will add models like o3, 4.1, and GPT-5 Thinking mini. 4.5 is only available to Pro users—it costs a lot of GPUs. We are working on an update to GPT-5’s personality which should feel warmer than the current personality but not as annoying (to most users) as GPT-4o. However, one learning for us from the past few days is we really just need to get to a world with more per-user customization of model personality.

— Link to tweet: https://x.com/sama/status/1955438916645130740

One surprise on launch day was seeing many prior models disappear from the picker. As a heavy o3 user, it was jarring to watch a daily driver vanish. The good news: they’ve returned (at least for now). I’m glad to have them back so I can test prompt migrations to GPT-5 while keeping familiar options available. It’s encouraging to see OpenAI respond quickly to feedback.

Thoughts

I’m still getting used to this release—and that feels odd to say out loud. The Auto router should help a lot once it clicks. I’ve been in situations where I used 4o but wished I had o3, and other times when I asked o3 a trivial question and watched it spin up deep reasoning that wasn’t needed. If Auto consistently routes simple prompts to “Fast” and harder ones to “Thinking,” that should save time and friction.

It does feel different, though, so I’m giving myself space to adjust: updating prompts, tweaking workflows, and seeing where GPT-5 slots in best.